That’s right …

If you are using the default code as your sitemap in Google Webmaster Tools, your site is missing out on every single page beyond the 26-page limit. Say I have 150 pages on my Blogger blog: the math says I’m missing the opportunity to let the Google spider crawl 124 additional pages on my blog!

I’m ready for change …

I actually found out that I was letting the Google spider short-change one of my other Blogger blogs, & this wasn’t only a few pages, it was a huge number of pages. In fact, that blog was over 400 pages long, & out of those 400 pages I was only allowing the Google spider to crawl my 26 latest blog posts.

Whooooa… STOP THE BUS!

The default sitemap code looks like this:

1) http://YOUR_BLOG_NAME_HERE.blogspot.com/atom.xml?orderby=updated

The code above is fine as long as you have fewer than 27 blog posts, but you will, without a doubt, exceed that limit at some point.

From all my research & trial and error on all my Blogger blogs over more than a year and a half, I’ve found the code below to be a better alternative to the default Blogger sitemap code. The new code breaks the sitemap down into batches of 100, so for every 100 pages on my blog, I need to tweak the sitemap code a little.

New sitemap code (Google spider crawls the first 100 blog posts)

1) atom.xml?redirect=false&start-index=1&max-results=100

In the new sitemap code above, we start with the very first blog post (start-index=1) & now allow the Google spider to crawl up to 100 posts (max-results=100).
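To make the two parameters concrete, here is a minimal Python sketch that builds one of these feed paths. The helper name `sitemap_path` is hypothetical (it is not part of Blogger or Google Webmaster Tools); it only assembles the same `redirect`, `start-index` and `max-results` query string shown above, assuming `start-index` counts from 1.

```python
from urllib.parse import urlencode


def sitemap_path(start_index: int, max_results: int = 100) -> str:
    """Build one Blogger feed path for a sitemap batch (hypothetical helper).

    Assumes start-index is 1-based: start_index=1 means the first post.
    """
    if start_index < 1:
        raise ValueError("start-index is 1-based and must be at least 1")
    # urlencode keeps the dict's insertion order and leaves '-' unescaped
    query = urlencode({
        "redirect": "false",
        "start-index": start_index,
        "max-results": max_results,
    })
    return f"atom.xml?{query}"


print(sitemap_path(1))
# atom.xml?redirect=false&start-index=1&max-results=100
```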

If you have more than 100 blog pages, submit additional sitemaps for the same blog on the Google Webmaster Tools website. For example, I have a Blogger blog with over 400 pages; for that blog I have 4 sitemaps, which look like the code below.

1) atom.xml?redirect=false&start-index=1&max-results=100

2) atom.xml?redirect=false&start-index=101&max-results=100

3) atom.xml?redirect=false&start-index=201&max-results=100

4) atom.xml?redirect=false&start-index=301&max-results=100

Notice how each new sitemap’s start-index is 100 posts higher than the previous one. With these sitemaps, I’m covered for the first 400 blog posts of my Blogger blog.
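The batching arithmetic can also be sketched in Python. Assuming `start-index` is 1-based (start-index=1 is the first post), a batch of 100 that starts at post 1 ends at post 100, so each following batch starts 100 posts later: 1, 101, 201, 301. The `sitemap_paths` helper below is hypothetical; it just lists the feed paths you would submit, one per sitemap.

```python
from urllib.parse import urlencode


def sitemap_paths(total_posts: int, batch_size: int = 100) -> list[str]:
    """List one feed path per sitemap batch (hypothetical helper).

    Assumes start-index is 1-based, so batches start at 1, 101, 201, ...
    """
    paths = []
    # Step through post numbers in batch_size jumps, starting at post 1
    for start in range(1, total_posts + 1, batch_size):
        query = urlencode({
            "redirect": "false",
            "start-index": start,
            "max-results": batch_size,
        })
        paths.append(f"atom.xml?{query}")
    return paths


for path in sitemap_paths(400):
    print(path)
```

For a blog with 450 posts, the same call returns five paths, the last one starting at post 401.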

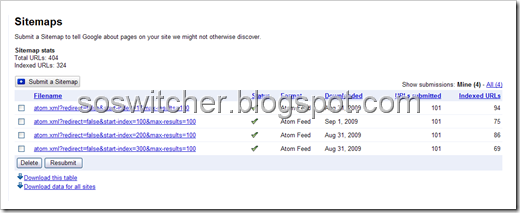

Below is a screenshot of my sitemaps, for one of my blogs that has over 400 pages.

NOTE:

When you submit sitemaps for a Blogger blog to Google Webmaster Tools, Google won’t crawl your Blogger label pages; that’s why not every page shows as crawled in the screenshot above.

This new sitemap code has greatly improved how Google crawls my blog: I went from having only the first 26 pages of my blog crawled to having 324 pages crawled by the Google spider.

I have internal links hard-coded into my template on every blog page, so it’s important to me to let Google crawl as many pages as possible, as often as possible. I also have widgets whose text changes often; the Google spider picks up that text too, which allows my blog to show a massive number of relevant keywords.
